Innovation Trends

All sections of this page are still in development.

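Major changes have taken place in cement plants over the last 170 years. These highly inter-related changes fall under four broad headings:

- Improved product performance

- Increased productivity

- Reduced energy consumption

- Reduced emissions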

Improved product performance stands at the top of the list because it was demand for such an improvement, driven by the market, that brought Portland cement into being. The second and third headings were driven by manufacturing cost considerations, and the fourth, until now, mainly by legislation. With the advent of carbon taxes and emissions trading, the fourth also becomes a cost issue.

The early history of the industry, at least in Britain, saw little advancement in these areas. Performance on large plants in 1895 was not much different from that of tiny plants fifty years earlier, and in some respects, particularly energy, was worse. However, as the cement industry developed abroad, the need to compete with the major producer on quality and price led overseas producers to consider these factors seriously. 1895 marks the dawn of a new, innovative age in the British industry, when it was finally realized that it was being overtaken and robbed of its markets by producers in the USA, Germany, France and many other countries. Not only were export markets being lost, but imported cement started to appear in Britain in quantity.

Product Performance

Improving the performance of cement almost invariably reduces the output of a given plant and increases its energy usage. Improvements have occurred on two major fronts:

- Increase in the “ultimate strength” of the cement

- Increase in the rate of strength development.

The ultimate strength is the strength developed after an infinitely long period of curing. Over the years, the alite content of clinker has increased at the expense of its belite content. Because alite has a lower ultimate strength than belite, the ultimate strength potential of clinker has, if anything, diminished. However, the main factor influencing ultimate strength is the fineness to which the clinker is ground. Often quoted is the “seven micron rule”: hydration (and therefore strength development) of cement takes place at the surface of the cement particles, gradually penetrating them as hydration proceeds. The initially rapid reaction slows down because reactants must be transported through an increasingly thick layer of hydrate reaction product, until the rate of reaction becomes minimal at a penetration depth of around 7 μm. Particles more than 14 μm in diameter therefore always leave an unreacted core: in a 50 μm particle the core is 36 μm across, so (36/50)³ ≈ 37% of the particle would never react.
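These figures follow from simple geometry. The Python sketch below assumes spherical particles of uniform density, so that the unreacted volume fraction is also the unreacted mass fraction, and a fixed penetration depth of 7 μm. The function name `unreacted_fraction` is hypothetical, and the model captures nothing beyond the seven micron rule itself.

```python
def unreacted_fraction(diameter_um: float, depth_um: float = 7.0) -> float:
    """Fraction of a spherical particle's volume (and, at uniform density, its mass)
    that never hydrates when hydration stops depth_um below the surface."""
    if diameter_um <= 0:
        raise ValueError("diameter must be positive")
    core_um = diameter_um - 2.0 * depth_um  # diameter of the unreacted core
    if core_um <= 0:
        return 0.0  # penetration from all sides meets in the middle: fully hydrated
    return (core_um / diameter_um) ** 3  # volume scales with diameter cubed


for d in (10, 14, 30, 50, 100, 200):
    print(f"{d:>4} um particle: {unreacted_fraction(d):.0%} never reacts")
```

The 50 μm case reproduces the 37% quoted above. Under the same rule, a 200 μm particle (5% by mass of a good 1890 cement) leaves about 80% of itself unreacted.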

The grinding of early cements was limited by the available flat-stone technology: a good cement in 1890 would contain 40% by mass of particles over 50 μm (and 5% over 200 μm), and would leave 30% of its mass unreacted. This is at least of benefit to industrial archaeologists, since early concrete contains substantial amounts of unreacted particles from which the mineralogy of the early clinker can be assessed. For the user, the unreacted material is nothing but a very expensive aggregate. In a more modern context, given the high energy usage and environmental cost of clinker, it is important that clinker is used effectively.

The introduction of the ball mill allowed finer grinding, although with increased power consumption, and the addition of air separators, returning the coarser fractions of the product to the mill for further grinding, allowed conversion of nearly all the clinker into reactable particles. Modern (from 1990) high-efficiency separators and roller mills allow more-or-less complete conversion of clinker into particles of reactable size.

The rate of strength development is controlled by two main factors:

- The alite/belite ratio of the clinker, since alite hydrates much faster than belite

- The fineness of the cement – a finer cement presents more surface area on which the hydration reaction can take place.

Over the years, the alite content of clinkers has risen steadily towards the theoretical maximum, where nearly all the silica is present as alite. This has been achieved by a corresponding increase in the calcium oxide content of the clinker. There are two energy penalties for this – to increase clinker calcium oxide content, more calcium carbonate has to be endothermically decomposed, and a higher temperature is required to complete the reactions in the burning zone.

As a rule of thumb, the strength of concrete at 24 hours age is proportional to the specific surface area of the cement. There has been a steady increase in specific surface area of cement throughout the twentieth century. This is achieved by simply reducing the feed rate to the milling system. Ball mills have a fairly constant power consumption irrespective of feed rate, so a reduced feed rate means an increased energy usage per tonne of product. In ball mills, as a rule of thumb, the energy requirement is proportional to the cement specific surface area to the power 1.5. From around 1990, the trend towards higher grinding energy has been reversed by the partial or total replacement of ball mills with more efficient equipment such as roller mills and hydraulic presses.
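Together, the two rules of thumb show the trade-off involved in grinding finer. Below is a minimal Python sketch that applies them as stated: 24-hour strength proportional to specific surface area (SSA), and ball-mill energy per tonne proportional to SSA to the power 1.5. It assumes constant mill power, so the feed rate falls as energy per tonne rises. The SSA values and function names are hypothetical and serve only as illustration.

```python
def relative_early_strength(ssa_new: float, ssa_ref: float) -> float:
    """Ratio of 24-hour concrete strengths, assuming strength is proportional to SSA."""
    return ssa_new / ssa_ref


def relative_ball_mill_energy(ssa_new: float, ssa_ref: float, exponent: float = 1.5) -> float:
    """Ratio of ball-mill energy per tonne, assuming energy is proportional to SSA**exponent."""
    return (ssa_new / ssa_ref) ** exponent


def relative_feed_rate(ssa_new: float, ssa_ref: float, exponent: float = 1.5) -> float:
    """Ratio of mill feed rates at constant mill power: output falls as energy per tonne rises."""
    return 1.0 / relative_ball_mill_energy(ssa_new, ssa_ref, exponent)


ssa_ref, ssa_new = 300.0, 400.0  # hypothetical specific surface areas, m²/kg
print(f"24 h strength x{relative_early_strength(ssa_new, ssa_ref):.2f}")
print(f"energy per tonne x{relative_ball_mill_energy(ssa_new, ssa_ref):.2f}")
print(f"feed rate x{relative_feed_rate(ssa_new, ssa_ref):.2f}")
```

With these illustrative numbers, a one-third gain in early strength costs roughly 54% more grinding energy per tonne and cuts mill output by about a third.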

Productivity

In 1900, a relatively simple and efficient plant like Johnson’s was making up to 1100 tonnes per week with a workforce of 300. About 100 of these were involved in the operation of the 45 static kilns. Labour productivity was a much greater concern in the USA where wage rates were considerably higher than in Britain, and this was a major driver in the development there of the rotary kiln. The new technology was seen by the bigger manufacturers in Britain as a means to obtain a decisive cost advantage. Once installed, rotary kilns initiated a drive for continuous productivity improvement because of the need to pay off their high capital cost. There was therefore a drive to increase the size and output of kilns without a concomitant increase in the workforce, and to increase the degree of automation of the process. The developments leading to the present day situation are described in the following charts.

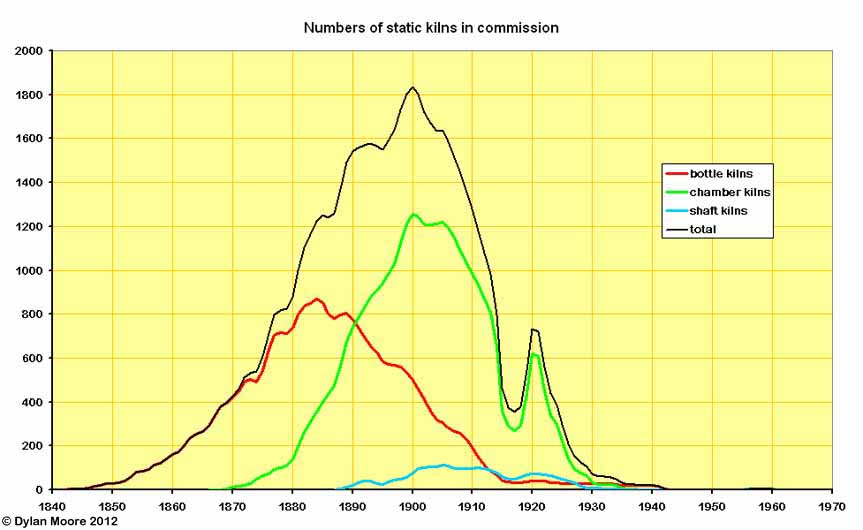

This mainly shows the change in form of batch kilns from bottle to chamber format. Various forms of chamber kiln rose to dominance in the 1880s. Less obvious here is the arrival of continuous kilns from 1890 onwards: these had 4 to 10 times the output of batch kilns. The peak in 1900 corresponds precisely with the introduction of rotary kilns, although a few small independent companies continued to commission new chamber kilns into the 1920s. The small increase in static kiln numbers in 1919-1920 was due to the re-commissioning of old kilns as a panic measure during the brief post-war boom. The last batch kilns to operate were bottle kilns in the Board's plants in the isolated Bridgwater area, at Dunball and Spinx. There was a brief revival of continuous shaft kilns in the 1950s, at Plymstock and South Ferriby.

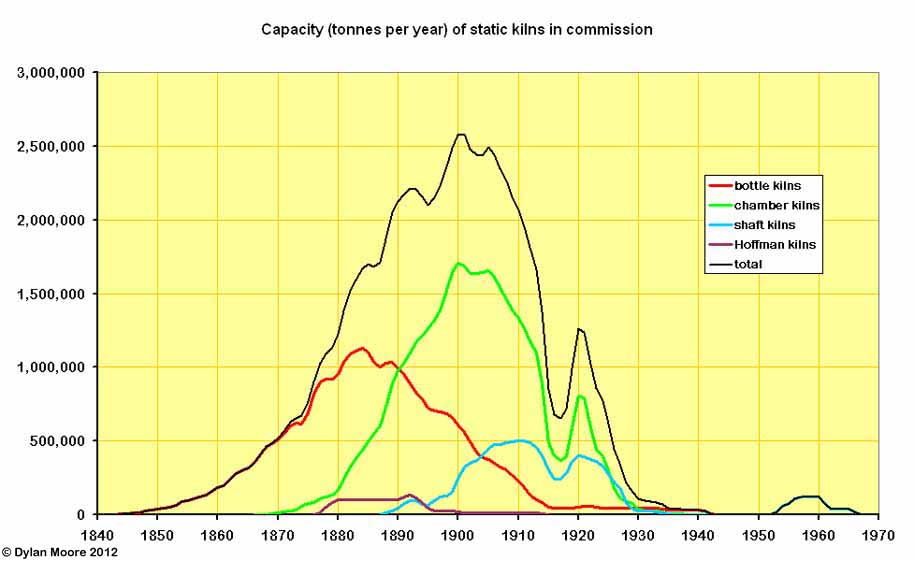

The capacity chart shows the contribution of semi-continuous or continuous kilns. Hoffman kilns were used to a limited extent during 1880-1900. Shaft kilns of various sorts began in the form of Dietzsch kilns in the 1890s. Various "straight-through" shaft designs were tried, and there was a considerable surge with the arrival of the Schneider kiln in 1900. Also visible is their limited revival in the form of "black meal" kilns in the 1950s.

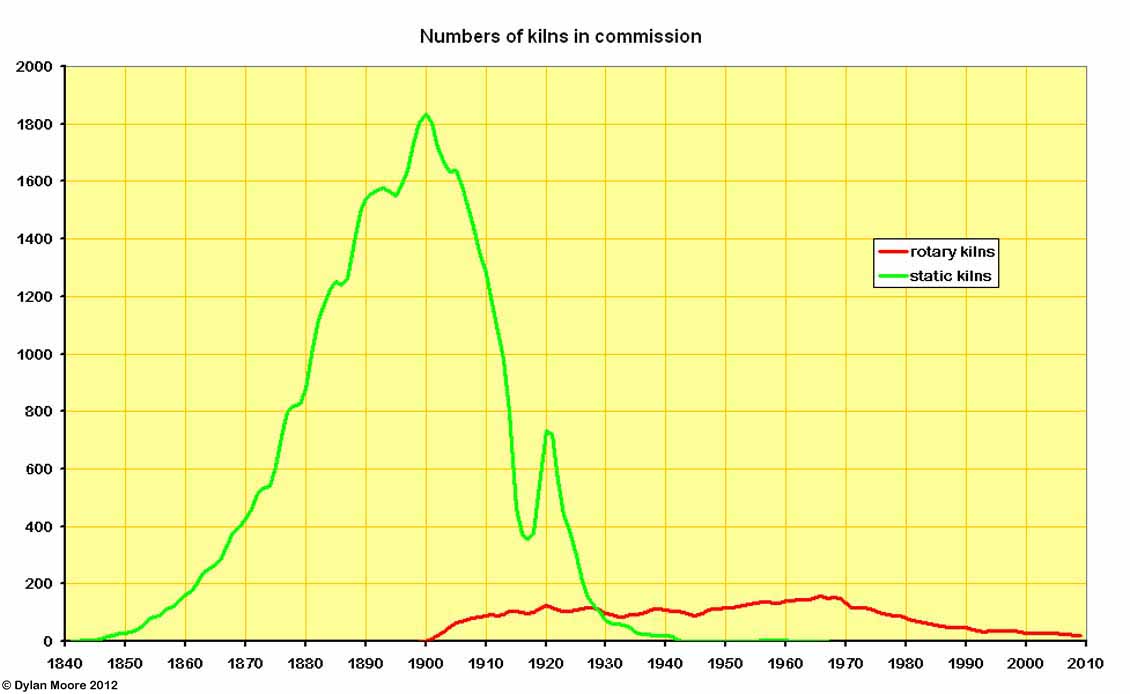

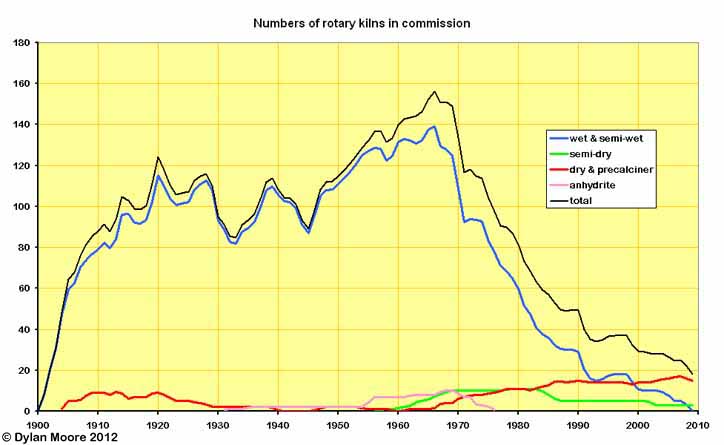

Here we see the prompt rise of rotary kilns in the first decade of the twentieth century, rapidly displacing static kilns.

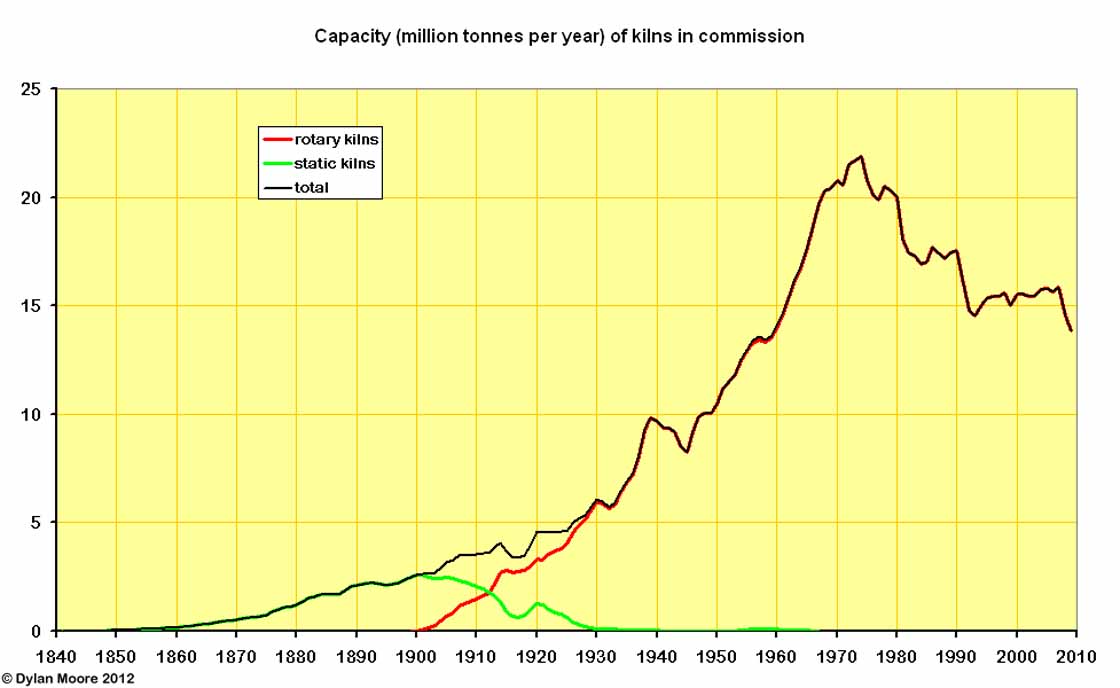

Even the earliest rotary kilns had five times the output of a batch kiln, and rotary kilns grew rapidly in size, so that by 1912 fewer than 100 rotary kilns were already out-producing the static kilns.

Dry process kilns were well represented in the early years, but faded away from 1920 onwards. Semi-dry process (first in Germany 1927: first in Britain 1957) began the tentative moves towards efficiency after WWII, and dry process suspension preheaters followed (first in Germany 1953: first in Britain 1961). As recently as 1991, more than half the kilns in commission were wet and semi-wet process. These are now all gone - clearly the only effective means of forcing out obsolete technology is economic slump.

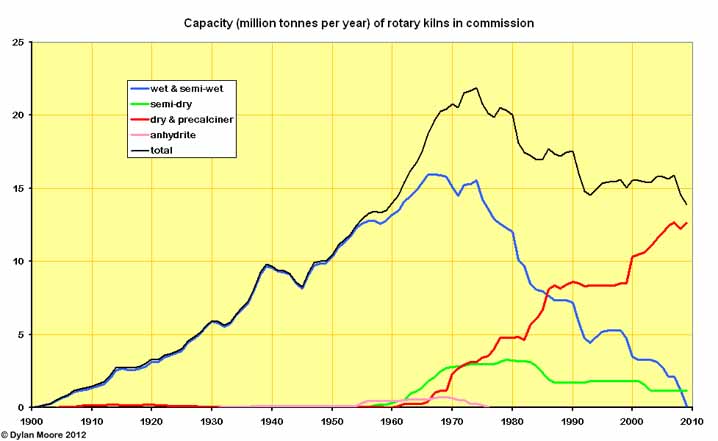

Here we see the generally much larger suspension preheater kilns very gradually displacing the other technologies, driven as much as anything by the economies of scale - i.e. reduced labour costs.

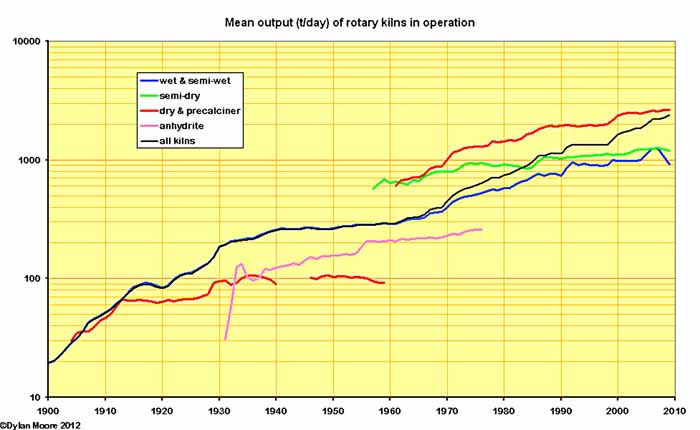

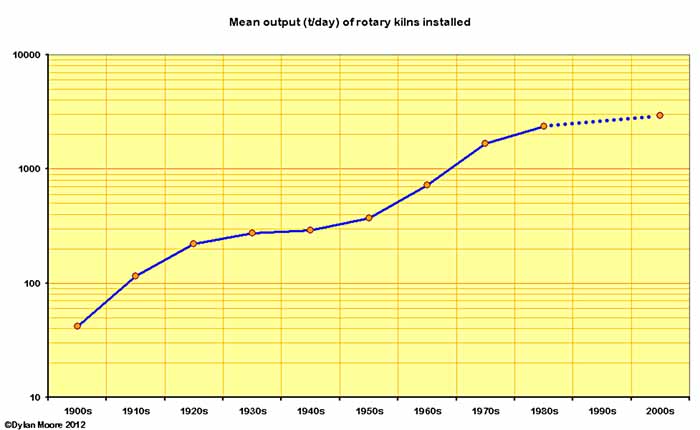

A similar picture emerges in the evolution of kiln output. Early dry process kilns did not evolve, and wet process kilns, having initially advanced rapidly, slowed their advance in the 1930s. Post-war, kiln sizes again increased, and as wet process kilns approached their output limit, further development consisted of a change to the generally more productive dry process kilns.

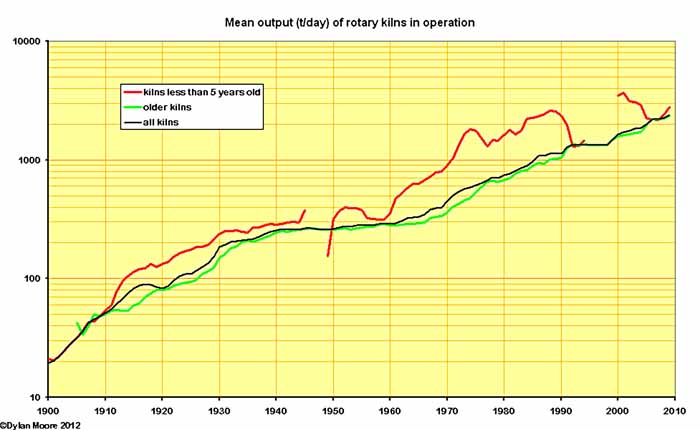

The charts above make it clear that the evolution of kiln performance is controlled not only by the rate of installation of new technology, but also by the rate of abandonment of the old. Here the mean kiln output is shown alongside the performance of the newer kilns of the period. Most striking is the period 1960-1990, in which the retention of old technology caused a considerable lag in performance compared with the best available. This is the fundamental problem with a mature technology: the capital cost of new, more efficient technology rises continually because of its increasing complexity. The pay-back rate on the investment is small, and so the equipment has to be operated long after its obsolescence in order to finance the next investment round. In these circumstances, rapid technological catch-up is only possible in an expanding market.

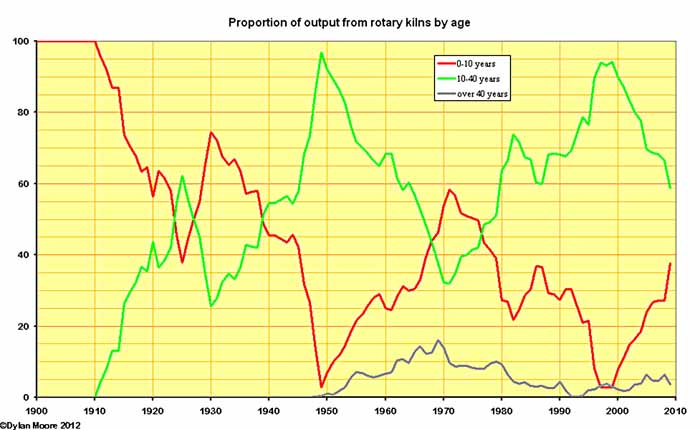

Here we see the effect of fluctuating levels of investment on the age of rotary kilns in service. In principle, the early, simple rotary kilns could continue in operation, fully depreciated, for many decades. Some kilns installed in the first decade were still in operation in the mid 1970s. The simple pattern is for new, hopefully more efficient plant to be installed during market booms, and for the older, less efficient plant to be shut down during the down-turns.

Another way of looking at the same phenomenon is the mean age of kilns in commission. On the face of it, the situation following the 1960s boom appears quite good, but much of the new capacity installed used obsolete technology. An apparent 40-year investment cycle failed to repeat in the UK after 1980 in a declining market, and the age of plant has reached record levels.
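For any year, the mean age of kilns in commission can be computed from each kiln's first-lit and last-cooled years. This is a minimal Python sketch that assumes year resolution. A kiln counts as in commission in a year if it was lit in or before that year and had not been cooled for the last time by then; kilns still running have no closure year. The fleet and the function name are hypothetical.

```python
from typing import Iterable, Optional, Tuple

Kiln = Tuple[int, Optional[int]]  # (year first lit, year last cooled, or None if still running)


def mean_age_in_year(kilns: Iterable[Kiln], year: int) -> float:
    """Mean age, in years, of the kilns in commission during `year`."""
    ages = [
        year - lit
        for lit, cooled in kilns
        if lit <= year and (cooled is None or cooled > year)
    ]
    if not ages:
        raise ValueError(f"no kilns in commission in {year}")
    return sum(ages) / len(ages)


# Hypothetical fleet, for illustration only.
fleet = [(1910, 1975), (1930, 1985), (1965, None), (1968, 1992)]
for y in (1970, 1980, 1990):
    print(y, mean_age_in_year(fleet, y))
```

Even this toy fleet shows the effect described above: new kilns pull the mean age down only as fast as old ones are retired.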

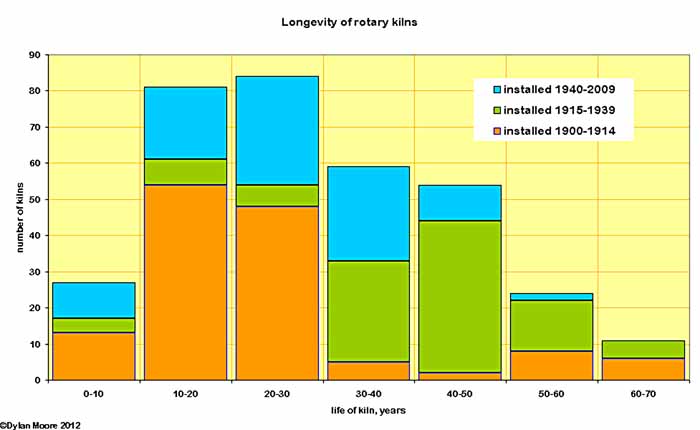

The longevity of kilns is summarised here, broken down according to period of installation. The early kilns had somewhat shorter lives, reflecting an immature industry whose low (and perhaps falling) capital costs allowed relatively quick replacement of obsolete plant. Mean longevity before WWI was 23.9 years. The inter-war years show the effect of mature technology and erratic growth: the mean longevity was 39.7 years. After WWII, longevity fell because the energy crisis forced the industry to improve its technology and abandon old plant. The mean for the period is 26.3 years (but some of the kilns in question are still operating, so the figure will probably increase by another two years).

The kilns that operated for over 60 years were as follows:

| Kiln | Longevity (years) |
|---|---|
| Sundon A2 | 67.0 |
| Wouldham B9 | 64.2 |
| Barrington A1 | 64.0 |
| Magheramorne A1 | 63.7 |
| Wilmington A1 | 62.8 |
| Wilmington A2 | 62.7 |
| Rhoose A1 | 62.0 |
| Swanscombe B1 | 61.7 |
| Chinnor A2 | 61.5 |
| Swanscombe B2 | 61.4 |
| Swanscombe B3 | 61.4 |

Note that in all the above, the "longevity" of a kiln is simply the period between the date when it was first lit and the date when it was last cooled down. Many long-lived kilns were used only intermittently, and their actual years of service were somewhat less than the stated figure. However, except for a few months lost due to lack of fuel in 1945, Sundon A2 ran flat out for pretty much its entire life: it exceeded 90% runtime in 34 of its 67 years. The performance of Swanscombe B1 is remarkable in that for more than half its life, it was making white clinker.
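The distinction between longevity and actual service can be made concrete. This is a minimal Python sketch that assumes per-year runtime fractions are available (the text gives such figures only for Sundon A2). A kiln still operating is measured up to an `as_of` date, which gives a lower bound that will grow, as noted for the post-war kilns. The dates, runtimes and function names are all hypothetical.

```python
from datetime import date
from typing import Iterable, Optional


def longevity_years(first_lit: date, last_cooled: Optional[date] = None,
                    as_of: Optional[date] = None) -> float:
    """Years from first lighting to final cooling.

    For a kiln still operating (last_cooled is None), the span runs to as_of,
    giving a lower bound that grows as the kiln keeps running.
    """
    end = last_cooled if last_cooled is not None else as_of
    if end is None:
        raise ValueError("a still-operating kiln needs an as_of date")
    return (end - first_lit).days / 365.25


def equivalent_full_years(annual_runtime: Iterable[float]) -> float:
    """Sum of per-year runtime fractions (0-1): years of service at 100% runtime."""
    return sum(annual_runtime)


# Hypothetical kiln, for illustration only.
lit, cooled = date(1930, 1, 1), date(1970, 1, 1)
runtime = [0.95] * 20 + [0.5] * 20  # busy first half, intermittent reserve in the second
print(f"longevity {longevity_years(lit, cooled):.1f} y, "
      f"service {equivalent_full_years(runtime):.1f} full years")
```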

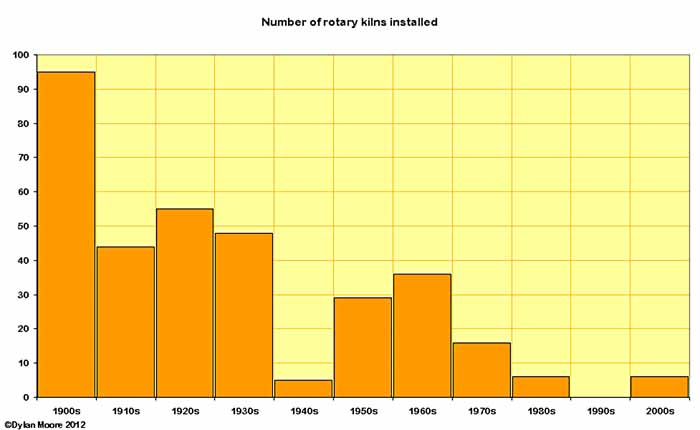

Here we focus on the numbers of new kilns commissioned. Because the data is erratic, a better picture emerges if the data is grouped into decades. The general downward trend reflects the increasing size of kilns. Superimposed on this are drops during the war decades, particularly during WWII and the ensuing period of the late 1940s when war debt was being paid off.
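Grouping by decade is a simple bucketing step. This minimal Python sketch counts commissionings per decade and, as a second view, totals the capacity installed per decade. The records and function names are hypothetical and serve only as illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def decade(year: int) -> int:
    """Start year of the decade containing `year` (e.g. 1937 -> 1930)."""
    return (year // 10) * 10


def kilns_per_decade(commission_years: Iterable[int]) -> Counter:
    """Number of kilns commissioned in each decade."""
    return Counter(decade(y) for y in commission_years)


def capacity_per_decade(kilns: Iterable[Tuple[int, float]]) -> Dict[int, float]:
    """Total capacity commissioned in each decade, from (year, capacity) pairs."""
    totals: Dict[int, float] = defaultdict(float)
    for year, capacity in kilns:
        totals[decade(year)] += capacity
    return dict(totals)


# Hypothetical commissioning records (year, capacity in kt/yr), for illustration only.
records = [(1901, 20), (1903, 25), (1903, 25), (1908, 40), (1912, 60),
           (1915, 60), (1924, 90), (1927, 100), (1929, 120), (1936, 150)]
counts = kilns_per_decade(y for y, _ in records)
capacity = capacity_per_decade(records)
for d in sorted(counts):
    print(f"{d}s: {counts[d]} kilns, {capacity[d]:.0f} kt/yr")
```

Comparing the two views shows falling kiln numbers alongside rising capacity per kiln.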

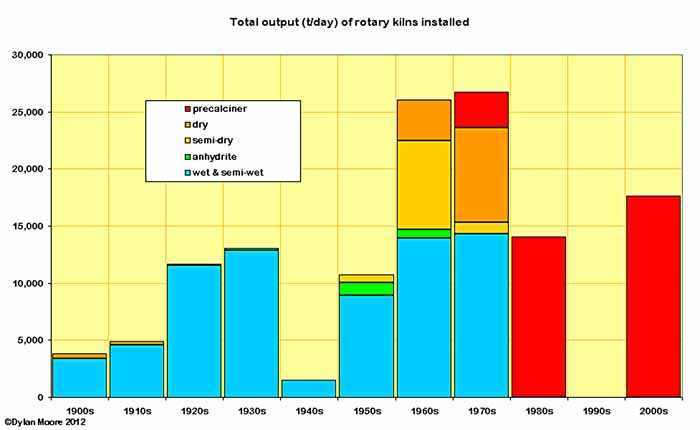

More detail can be derived by looking at capacity installed rather than numbers of kilns. The early dry process fades away by the 1920s. Anhydrite process first shows in the 1930s, then has its brief heyday in the 50s and 60s. Semi-dry Lepol kilns appear in the 50s, 60s and 70s, becoming the first serious challenge to wet process. Dry process suspension preheater and precalciner kilns begin in the 60s and from 1980 onwards only precalciners are installed. Most striking is the fact that, even in the 1970s, more than half the capacity installed was wet and semi-wet process.

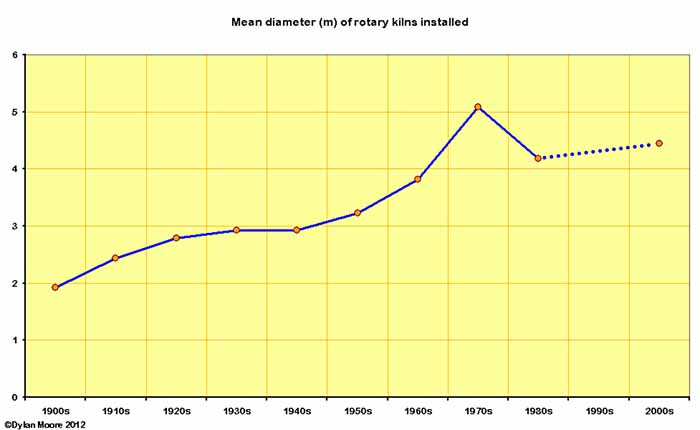

Here are a few trend lines regarding the size of kilns installed.

Energy Consumption

Energy consumed in the manufacture of clinker consists of:

- Kiln fuel

- Fuel used in raw material preparation

- Fuel used in the production of the motive power used

Kiln fuel. The thermal efficiency of kilns is to a large degree controlled by the process selected, and development has consisted largely of the adoption and refinement of new processes. The fate of the heat developed by burning kiln fuel has been comprehensively studied, and consists of:

- Chemical heat of reaction

- Heat in exhaust gases

- Heat in clinker

- Heat lost through the walls of the kiln system

A certain amount of energy is necessarily required in order to make clinker because the nett chemical reactions involved are endothermic. This is largely because the CaO in the clinker is usually made almost entirely from calcium carbonate, so historical trends mainly track the changes in clinker CaO content, as discussed under Product Performance.
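This Python sketch shows how the carbonate term scales with clinker CaO content. It uses the approximate standard enthalpy of CaCO3 → CaO + CO2 (about 178 kJ/mol at 25 °C) and standard molar masses. It covers only the gross heat of decarbonation. The nett heat of clinker formation is lower, because forming the clinker minerals releases some heat. The CaO contents and function names are hypothetical.

```python
M_CAO = 56.08           # g/mol, CaO
M_CO2 = 44.01           # g/mol, CO2
DH_CALCINATION = 178.0  # kJ per mol for CaCO3 -> CaO + CO2 (approximate, 25 °C)


def calcination_heat(cao_fraction: float, carbonate_share: float = 1.0) -> float:
    """MJ per kg of clinker absorbed in decomposing the CaCO3 that supplies its CaO.

    cao_fraction: mass fraction of CaO in the clinker.
    carbonate_share: fraction of that CaO derived from carbonate (close to 1 in practice).
    """
    mol_cao_per_kg = cao_fraction * carbonate_share * 1000.0 / M_CAO
    return mol_cao_per_kg * DH_CALCINATION / 1000.0  # kJ -> MJ


def calcination_co2(cao_fraction: float, carbonate_share: float = 1.0) -> float:
    """kg of CO2 released per kg of clinker by the same decomposition."""
    return cao_fraction * carbonate_share * M_CO2 / M_CAO


for cao in (0.62, 0.65, 0.67):  # hypothetical clinker CaO contents
    print(f"CaO {cao:.0%}: {calcination_heat(cao):.2f} MJ/kg, "
          f"{calcination_co2(cao):.3f} kg CO2/kg clinker")
```

Both quantities are linear in CaO content. The drive towards high-alite, high-lime clinker therefore raised the unavoidable heat and the process CO2 in step.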

The kiln system exhaust gases consist of evaporated water, carbon dioxide from decomposition of carbonates, combustion gases from the burning of fuel, and waste cooling air from coolers. The energy wastage associated with these is therefore a function both of the amount of each waste stream generated, and the temperature at which it is emitted. In addition to the "sensible heat" in waste streams emitted at above-ambient temperature, there is also the latent heat of evaporation of the water - of dominant importance in the wet process.
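The latent-heat term that dominates the wet process can be estimated from slurry moisture. This minimal Python sketch assumes a latent heat of about 2.26 MJ per kg of water (the value at 100 °C). It also assumes about 1.55 kg of dry raw material per kg of clinker; that ratio is an assumption for illustration, not a figure from the text. Sensible heat in the vapour and the other gases is ignored. The moisture contents and function names are hypothetical.

```python
LATENT_HEAT = 2.26  # MJ per kg of water evaporated (approximate value at 100 °C)


def water_per_kg_clinker(water_fraction: float, dry_feed_ratio: float = 1.55) -> float:
    """kg of water to evaporate per kg of clinker.

    water_fraction: slurry moisture as a mass fraction of the wet slurry.
    dry_feed_ratio: kg of dry raw material per kg of clinker (assumed value).
    """
    if not 0.0 <= water_fraction < 1.0:
        raise ValueError("water_fraction must be in [0, 1)")
    return dry_feed_ratio * water_fraction / (1.0 - water_fraction)


def latent_heat_per_kg_clinker(water_fraction: float, dry_feed_ratio: float = 1.55) -> float:
    """MJ per kg of clinker leaving the stack as latent heat of water vapour."""
    return water_per_kg_clinker(water_fraction, dry_feed_ratio) * LATENT_HEAT


for w in (0.40, 0.35, 0.30):  # hypothetical slurry moisture contents
    print(f"{w:.0%} water: {latent_heat_per_kg_clinker(w):.2f} MJ/kg clinker")
```

Because the water term grows as w/(1 - w), even modest reductions in slurry water give a worthwhile saving.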

The heat in the clinker leaving the system is a function of the efficiency of the cooler.

The loss of heat to the kiln walls was a significant factor in early batch kilns, where the kiln, with a large thermal mass compared with the size of the charge, had to be heated up and cooled down in each operating cycle. The heat lost through the walls of modern kiln systems consists mainly of heat emitted by convection and radiation from the shell of the rotary kiln, mainly from its calcining and burning zones. Various techniques have been tried to insulate kilns, but no real permanent change has taken place in the temperature profiles of kiln shells since the initial period during which operators learned how to maintain a stable coating on the kiln interior. Instead, heat loss has been reduced by increases in kiln size and output, which reduce the ratio of shell area to output. This process is at its most developed in the precalciner kiln, which has a small kiln surface area and a large output.
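The shell area to output ratio is simple to compute. This minimal Python sketch treats the rotary kiln shell as a plain cylinder. It ignores the kiln ends and the surfaces of any preheater or precalciner vessels, which also lose heat. The kiln dimensions and outputs are hypothetical and do not describe any real plant.

```python
import math


def shell_area_per_output(diameter_m: float, length_m: float, output_t_per_day: float) -> float:
    """Rotary kiln shell area (m²) per t/day of clinker output, shell treated as a cylinder."""
    return math.pi * diameter_m * length_m / output_t_per_day


# Hypothetical kilns, for illustration only (not data for any real plant).
kilns = {
    "long wet kiln": (5.0, 165.0, 1500.0),
    "precalciner kiln": (4.5, 70.0, 3000.0),
}
for name, (d, length, out) in kilns.items():
    print(f"{name}: {shell_area_per_output(d, length, out):.2f} m² per t/day")
```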

The developments leading to the present day situation are described in the following charts.

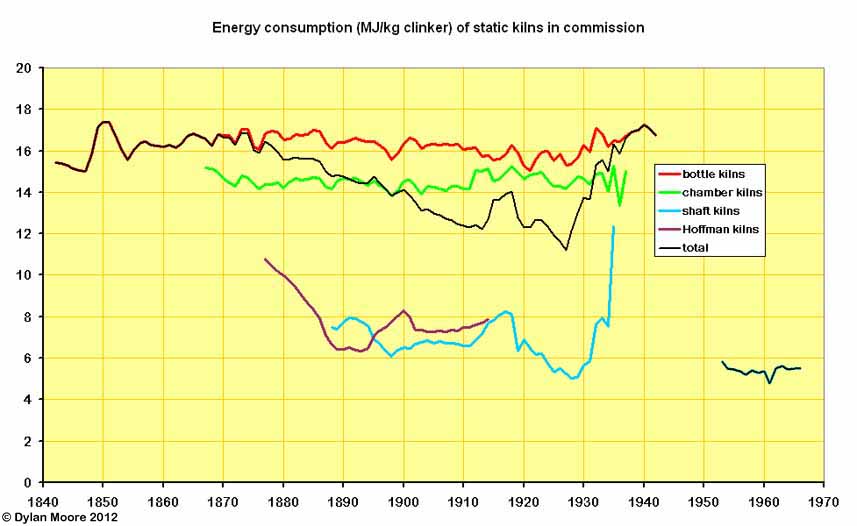

The energy consumption (always shown here including fuel used in raw material drying before the kiln stage) was fairly fixed for each process. Bottle kilns and chamber kilns were essentially the same, but for the former, additional fuel was needed to dry the raw material, and there was in fact a rising trend up to 1880 with the gradual adoption of the thick slurry process. Subsequent falls were due to the rise in the number of "dry process" bottle kilns. The energy consumption of both bottle and chamber kilns rose somewhat in the twentieth century as they became intermittently used reserve capacity. The average energy consumption for all types of static kiln fell gradually as the much more efficient Hoffman and shaft kilns were developed, but these went out of use before the others, and the last pre-war static kilns were in fact bottle kilns. The "black meal" kilns in the 1950s (there were only three, and they were tiny) were, ironically, Britain's most efficient until 1957.

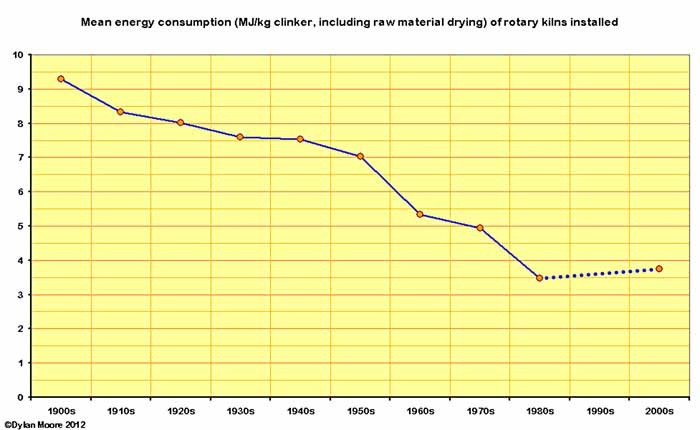

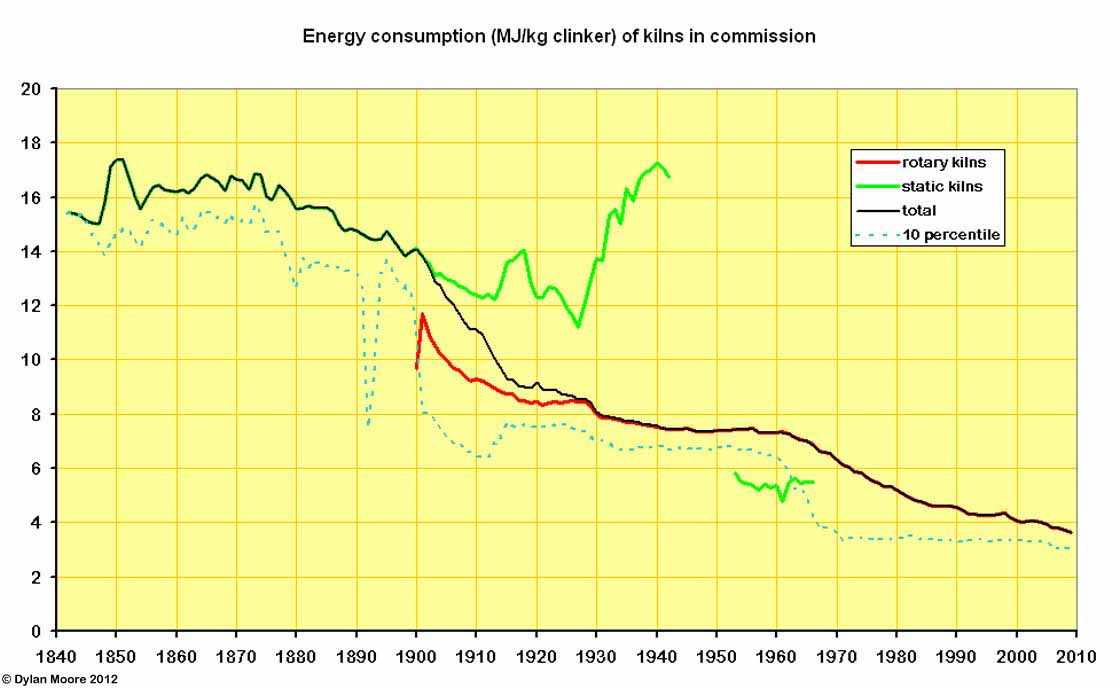

This shows the effect on energy consumption of the changeover to rotary production. The early rotary kilns were very inefficient, but their fuel consumption was moderated, mainly by simply lengthening them. Further improvements came in the 1930s in the form of better heat exchangers (chain systems and calcinators), but the almost exclusively wet process kilns remained less fuel-efficient than shaft kilns, and performance only began to improve with the installation of semi-dry and dry process kilns from 1960 onwards. Also shown is the "10 percentile" performance, i.e. the efficiency exceeded by the best 10% of capacity. The slow rate of adoption of available efficient processes is clear.
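The text does not say exactly how the "10 percentile" was computed. This Python sketch uses one plausible reading: rank kilns from lowest to highest specific energy, then take the energy of the kiln at which cumulative capacity first reaches 10% of the fleet total. It also computes a capacity-weighted fleet mean for comparison. The fleet figures and function names are hypothetical.

```python
from typing import Iterable, List, Tuple

Kiln = Tuple[float, float]  # (capacity, specific fuel energy in MJ/kg clinker)


def capacity_weighted_mean(kilns: Iterable[Kiln]) -> float:
    """Fleet-average specific energy, weighting each kiln by its capacity."""
    kilns = list(kilns)
    total = sum(cap for cap, _ in kilns)
    if total <= 0:
        raise ValueError("fleet has no capacity")
    return sum(cap * energy for cap, energy in kilns) / total


def best_share_energy(kilns: Iterable[Kiln], share: float = 0.10) -> float:
    """Specific energy reached by the most efficient `share` of capacity.

    Kilns are ranked from lowest to highest energy use; the result is the energy
    of the kiln at which cumulative capacity first reaches `share` of the total.
    """
    ranked: List[Kiln] = sorted(kilns, key=lambda k: k[1])
    total = sum(cap for cap, _ in ranked)
    if total <= 0:
        raise ValueError("fleet has no capacity")
    cumulative = 0.0
    for cap, energy in ranked:
        cumulative += cap
        if cumulative >= share * total:
            return energy
    return ranked[-1][1]  # guards against rounding when share == 1.0


# Hypothetical fleet: (capacity in kt/yr, MJ/kg clinker), for illustration only.
fleet = [(600, 3.5), (1000, 5.5), (800, 6.0), (1200, 7.2), (1400, 6.5)]
print(f"mean {capacity_weighted_mean(fleet):.2f} MJ/kg, "
      f"best 10% of capacity {best_share_energy(fleet):.2f} MJ/kg")
```

The gap between the two numbers is the lag attributed in the text to the slow adoption of efficient processes.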

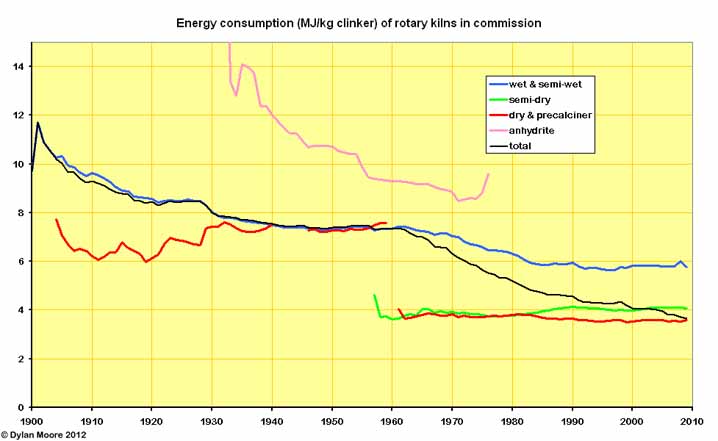

Until 1960, the history of rotary kiln fuel efficiency was essentially that of the wet process, which by 1960 had settled into a self-satisfied plateau. However, much more efficient slurry-based processes were possible, and emerged during the following two decades. Mechanical removal of slurry water (semi-wet process) contributed somewhat to this, but the main thrust was in improved kiln heat exchange efficiency and in making slurry with less water. The largest effect on efficiency, though, has been the move to dry process.

Fuel used in production of motive power. The motive power requirements of fixed plant (mainly raw mills, fuel mills and finish mills) are complex, and are treated in a separate article.

Emissions

Early open lime kilns emitted choking clouds of gases containing SO2 and CO2 at ground level. Nonetheless, lime kilns were for a long time said to have a positive effect on the health of those living in the locality. This is perhaps because, in dank malarial areas like the Thames estuary, lime kilns attracted swarms of mosquitoes to a premature death. The health-giving properties of cement kilns were still being proclaimed by the industry well into the twentieth century. The cement industry was brought within the scope of the Alkali Act in 1881, for the curious reason that the Act covers the manufacture of “aluminous materials”. From then on, the industry’s activities occupied increasing amounts of the Alkali Inspectorate’s time, and were the main preoccupation of the Inspectorate’s South-East region. Inspectors’ initial interest was in gaseous emissions such as HCl and H2S, their main concerns in the alkali industry, although the cement industry emits insignificant amounts of these.

Dust

The arrival of the rotary kiln finally gave the inspectors something to get their teeth into, because dust emission began to be a feature of the industry. The lazy plumes of static kilns carried little dust, and such dust nuisance as existed on early plants was from the manual packing of finished cement. But rotary kiln exhaust gases were normally heavily laden with dust, and the first generation kilns made no attempt at all to prevent it entering the atmosphere. While no standard existed for allowable dust emission quantities, inspectors started to put pressure on manufacturers to reduce the nuisance. By 1907, suppliers were already providing a minimal sort of dust control, generally based on the “wet bottom” principle. The kiln exhaust was directed downwards through a “U-tube” duct before reaching the stack. In the bottom of the duct, a layer of water or fresh slurry was maintained, and the entrained dust would, hopefully, impact upon the liquid surface. The resulting sludge would be discarded or added to the slurry feed. Such devices may have had some beneficial effect on kilns with natural draught, but kilns began to be installed with induced-draught fans from 1920 onwards, and an era began in which the cement-making districts were characterized by drifts of dust in streets and gardens.

The initial response by the Inspectorate was to insist on increased height of stacks. As early as 1873, I. C. Johnson had responded to public pressure by building the first 300 ft stack on Thames-side, but most stacks were much shorter. As a rule of thumb, the maximum concentration of dust fallout occurs at ten stack-heights from the stack base, and dust concentrations are inversely proportional to the cube of stack height. The insistence on high (and expensive) stacks persisted long after kiln dust emissions had ceased to be significant.
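The stack-height rules of thumb can be applied directly. This minimal Python sketch takes both rules exactly as stated: peak fallout at ten stack-heights from the base, and concentration inversely proportional to the cube of stack height. It does not attempt a real plume-dispersion model. The 150 ft comparison stack and the function names are hypothetical.

```python
def max_fallout_distance(stack_height: float, multiple: float = 10.0) -> float:
    """Distance from the stack base to peak fallout (same units as stack_height)."""
    return multiple * stack_height


def relative_fallout(new_height: float, old_height: float, exponent: float = 3.0) -> float:
    """Ratio of fallout concentrations, new/old, assuming concentration ∝ 1 / height**exponent."""
    return (old_height / new_height) ** exponent


for h in (150.0, 300.0):  # stack heights in feet; 150 ft is a hypothetical shorter stack
    print(f"{h:.0f} ft stack: peak fallout about {max_fallout_distance(h):.0f} ft away, "
          f"concentration x{relative_fallout(h, 150.0):.3f} relative to a 150 ft stack")
```

On these rules, doubling a stack's height moves the peak twice as far out and cuts its concentration to one eighth. This goes some way to explaining the Inspectorate's attachment to tall stacks.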

Effective methods of removing dust from kiln exhaust gases began with electrostatic precipitators. These became available in 1929, and Johnson's A7 and A8 were retrofitted with them in 1933, although the Billingham anhydrite process kilns had “mist” precipitators (already common in the chemical industry) from the outset in 1930. From 1937, precipitators were standard on new installations. The last plant to operate without a precipitator was Lewes, which finally installed one in 1980, a year before its closure. In recent years, tightening standards on particulate emission and the proliferation of hard-to-precipitate high-alkali dusts have led to consideration of bag filters, which are now available for fairly high temperature duty. Bag filters also get around a serious problem with otherwise highly efficient precipitators: if kiln gases containing a large amount of carbon monoxide enter an energised precipitator, an explosion can result. Because of this, precipitators are automatically cut out whenever a “spike” of CO is produced, immediately reducing dust capture to near zero; this is particularly a feature of kiln light-ups. However, with precipitators and high stacks, kiln dust emissions in general are minimal, and dust nuisance, which continued for many years to be a feature, mainly resulted from “low-level” emissions from multiple small leakage points around plants and, particularly, from the digging and transport of clinker kept in open stockpiles. The latter practice is now prohibited.

Gas Emissions

Obsession with, and high expenditure on, the control of kiln dust emissions tended to divert attention from other significant emissions. In more recent years attention has focussed on sulfur dioxide (causing “acid rain”) and nitric oxide (causing “photochemical smog” in some conditions).

SO2 has always been emitted by cement kilns, as a result of the sulfur content of the fuel and of the raw material. In the presence of excess air in the kiln, the sulfur can be captured by the rawmix or the clinker in the form of sulfate, but if oxygen is restricted, SO2 can escape. Such data as remains on the clinker from bottle kilns and chamber kilns indicates that they absorbed sulfur fairly efficiently, capturing 60-80%, because of the good contact between the gas and the raw material. High-sulfur raw materials were avoided, but large amounts of fuel were used, and using typical data, a 30% emission implies around 4 kg of SO2 emitted per tonne of clinker made. A large change took place with the arrival of rotary kilns. The early kilns had a high fuel usage, tended to be run in reducing conditions, and offered little opportunity for SO2 to be absorbed, so a kiln with an energy input of 10 MJ/kg might emit 6 kg/t. However, this rate declined to below 4 kg/t as the fuel efficiency of kilns was improved and better gas/feed contact was obtained with chain systems. With the arrival of dry process kilns with preheaters, gas/feed contact was greatly increased, and although such kilns tended to use higher-sulfur materials, emissions could be lower than 2 kg/t. Nevertheless, some sites had very high sulfur raw materials and/or fuel, and efforts have been made to reduce the emission rate further. SO2 scrubbers have so far been fitted at Ribblesdale and Dunbar. Interestingly, because they react the SO2 with calcium carbonate, they cause a corresponding increase in CO2 emission.
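The emission figures above rest on a sulfur mass balance. This minimal Python sketch computes SO2 per tonne of clinker from fuel energy, fuel calorific value, fuel sulfur, volatile raw-material sulfur and the fraction of sulfur that escapes. It also computes the scrubber CO2 penalty, which is one mole of CO2 per mole of SO2 captured by carbonate. The inputs are illustrative only and are not the data behind the figures quoted in the text. The function names are hypothetical.

```python
M_S, M_SO2, M_CO2 = 32.06, 64.06, 44.01  # g/mol


def so2_emission(heat_mj_per_kg: float, fuel_cv_mj_per_kg: float, fuel_sulfur: float,
                 escape_fraction: float, raw_sulfur_kg_per_t: float = 0.0) -> float:
    """kg of SO2 emitted per tonne of clinker.

    heat_mj_per_kg: kiln fuel energy per kg of clinker.
    fuel_cv_mj_per_kg: calorific value of the fuel.
    fuel_sulfur: sulfur mass fraction of the fuel.
    escape_fraction: share of the sulfur input leaving as SO2 (the rest is captured).
    raw_sulfur_kg_per_t: volatile sulfur from raw materials, kg per tonne of clinker.
    """
    fuel_kg_per_t = heat_mj_per_kg / fuel_cv_mj_per_kg * 1000.0
    sulfur_kg_per_t = fuel_kg_per_t * fuel_sulfur + raw_sulfur_kg_per_t
    return sulfur_kg_per_t * (M_SO2 / M_S) * escape_fraction


def scrubber_co2(so2_captured_kg: float) -> float:
    """kg of CO2 released when SO2 is captured by calcium carbonate
    (CaCO3 + SO2 -> CaSO3 + CO2, usually oxidised on to CaSO4: one CO2 per SO2)."""
    return so2_captured_kg * M_CO2 / M_SO2


# Illustrative inputs only: 10 MJ/kg, 25 MJ/kg fuel, 1.5% fuel sulfur, half escaping.
print(f"{so2_emission(10.0, 25.0, 0.015, escape_fraction=0.5):.1f} kg SO2/t clinker")
print(f"{scrubber_co2(1.0):.2f} kg CO2 per kg SO2 scrubbed")
```

The sketch makes the emission proportional to both fuel usage and escape fraction. This matches the text's account of rotary kilns improving as fuel efficiency and gas/feed contact improved.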

Nitrogen oxides (and specifically nitric oxide NO) are found to be formed by two distinct mechanisms:

- organic nitrogen present in the fuel, although mostly producing nitrogen in the combustion gas, produces a certain amount of "fuel" NO at low temperature.

- atmospheric nitrogen reacts with oxygen to form "thermal" NO at very high temperatures (above 1800°C).

Since most fuel contains low levels of nitrogen, the low-temperature mechanism results in a low background level of NO. The nature of combustion in static kilns, in which the fuel is in close contact with the feed, means that the gas temperature never gets high, and thermal NO was probably not produced. In the rotary kiln, however, the hot flame produces large amounts of thermal NO, and the cement industry was probably the most important emitter of NO in the first half of the twentieth century. NO emissions became a concern when car ownership rose in the post-war period, as cars became responsible for the large majority of emissions. From 1970 onwards, driven originally by USA legislation, various techniques have been employed to reduce NO emission. Precalciner kilns have the advantage that 60% of the combustion air by-passes the hot burning zone, and the ability to control the individual kiln stages means that techniques such as “staged combustion” can be used to greatly reduce NO emissions.
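The sharp temperature dependence of thermal NO can be illustrated with the rate constant of the rate-limiting step of the Zeldovich mechanism, O + N2 → NO + N. The constants used below (a pre-exponential factor of 1.8 × 10^14 cm³ mol⁻¹ s⁻¹ and an activation temperature of 38 370 K) are a commonly quoted literature value, assumed here. The sketch compares rate constants only. It ignores the concentration of oxygen atoms, which itself rises steeply with temperature, and residence time, so it understates rather than predicts the real sensitivity.

```python
import math

A = 1.8e14       # pre-exponential factor, cm^3 mol^-1 s^-1 (assumed literature value)
THETA = 38370.0  # activation temperature E/R, kelvin (assumed literature value)


def k_zeldovich(t_celsius):
    """Rate constant of O + N2 -> NO + N at the given gas temperature."""
    t_kelvin = t_celsius + 273.15
    return A * math.exp(-THETA / t_kelvin)


for t in (1400, 1600, 1800, 2000):
    ratio = k_zeldovich(t) / k_zeldovich(1400)
    print(f"{t} °C: rate constant x{ratio:,.0f} relative to 1400 °C")
```

Even on this partial view, the rate constant rises by more than two orders of magnitude between 1400 °C and 2000 °C, consistent with static kilns producing little thermal NO and rotary kiln flames producing a great deal.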

Carbon Dioxide

Throughout the entire history of the industry, carbon dioxide emission has been the “elephant in the room”, not really considered at all until around 1990. A Google search nowadays finds a myriad of references describing cement kilns as the major industrial source of carbon dioxide after power generation. Reduction of this emission is now the major technical preoccupation of the industry.

The simplest approach to reducing CO2 emission per tonne of cement is to put less clinker in the finished product. Small additions of fillers across the board reduce clinker content by 5% or so. Materials that can be added in larger quantities, such as pozzolans and granulated blastfurnace slag, are increasingly used, although at high replacement levels the resulting cement is of limited application because of its low reactivity.
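Because clinker-related CO2 scales directly with clinker content, the effect of additions is a single multiplication. A minimal sketch, assuming a hypothetical 850 kg CO2 per tonne of clinker and illustrative clinker fractions, and treating the additions themselves as CO2-free (which ignores their drying and grinding):

```python
def co2_per_tonne_cement(clinker_co2, clinker_fraction):
    """Clinker-related CO2 per tonne of cement (hypothetical helper).

    clinker_co2      -- kg CO2 emitted per tonne of clinker (assumed figure)
    clinker_fraction -- mass fraction of clinker in the finished cement (0-1)
    Additions are treated as CO2-free.
    """
    return clinker_co2 * clinker_fraction


CLINKER_CO2 = 850.0  # hypothetical kg CO2 per tonne clinker

for label, fraction in [("baseline (illustrative)", 0.95),
                        ("with ~5 % filler", 0.90),
                        ("high-slag blend", 0.40)]:
    print(f"{label}: {co2_per_tonne_cement(CLINKER_CO2, fraction):.0f} kg CO2/t cement")
```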

The carbon dioxide produced on making clinker itself has in fact progressively diminished during the twentieth century (although prior to 1900 there was a slight upward trend). There are four distinct sources of CO2 in clinker manufacture:

- Carbonate decomposition

- Kiln fuel combustion

- Fuel used in raw material preparation

- Fuel used in the production of the electric power used

CO2 from carbonate decomposition. Insofar as the calcium oxide in clinker is supplied from calcium carbonate in the raw mix, this has been a fairly invariant feature of the process. In fact, virtually all the calcium available in nature exists as carbonate, and in most raw mixes only a small fraction of a percent of the calcium is present in any other form. The potential for using other sources of calcium is limited: wollastonite (CaSiO3) has been considered, but it occurs far too rarely to be a useful replacement for limestone. Blastfurnace slags can be, and are, used, but the supply of slag of suitable chemistry is limited, and in any case slag is made originally by decomposing limestone. Leaving aside clinker made by the anhydrite process and by inclusion of slag in the rawmix, the “inherent” carbon dioxide emission per unit mass of clinker has risen gradually over time to a plateau, owing to the rise in CaO content discussed above under Product Performance. There is some likelihood that this figure can be brought back to the earlier level, or slightly below it, by producing cements in which alite is replaced with “reactive belite”.
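The inherent CO2 follows directly from the CaO content via CaCO3 → CaO + CO2 (about 44.01 g of CO2 per 56.08 g of CaO). The sketch below, with hypothetical function names, applies this to an assumed clinker containing 65% CaO and to the pure silicates alite (C3S) and belite (C2S). It ignores magnesium carbonate and assumes all CaO comes from carbonate. It shows why replacing alite with belite lowers the inherent emission.

```python
M_CAO = 56.077   # g/mol
M_CO2 = 44.009   # g/mol
M_SIO2 = 60.084  # g/mol


def calcination_co2(cao_fraction):
    """kg CO2 per kg clinker from CaCO3 -> CaO + CO2,
    assuming all CaO in the clinker comes from carbonate."""
    return cao_fraction * M_CO2 / M_CAO


def cao_fraction_of_silicate(n_cao):
    """Mass fraction of CaO in the calcium silicate nCaO.SiO2."""
    return n_cao * M_CAO / (n_cao * M_CAO + M_SIO2)


alite = calcination_co2(cao_fraction_of_silicate(3))   # C3S
belite = calcination_co2(cao_fraction_of_silicate(2))  # C2S
print(f"clinker with 65 % CaO: {calcination_co2(0.65):.3f} kg CO2/kg")
print(f"pure alite (C3S):  {alite:.3f} kg CO2/kg")
print(f"pure belite (C2S): {belite:.3f} kg CO2/kg")
```

On these assumptions pure alite carries about 0.58 kg CO2/kg and pure belite about 0.51 kg CO2/kg.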

A significant alternative source of calcium is calcium sulfate, used in the anhydrite process from 1931 to 1976. Anhydrite process rawmixes contained small amounts of calcium carbonate adventitiously present in the anhydrite, and the inherent carbon dioxide associated with the clinker was 0.019 kg/kg for Billingham and 0.023 kg/kg for Widnes. I have been unable to find a value for Whitehaven. A new anhydrite process is now being proposed, using multiple calciners, to produce a more reliable product with much better fuel efficiency than the old process. However, the process co-produces sulfuric acid, the market for which is much smaller than that for cement, and the problem of distributing acid from a single large plant would again arise.
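The scale of the acid problem can be estimated from stoichiometry. As a rough sketch, assume every mole of CaO in the clinker is supplied by CaSO4 and every mole of SO2 released is converted to sulfuric acid (net CaSO4 → CaO + SO2 + ½O2, ignoring the coke used as reductant). The function name and the 65% CaO content are illustrative assumptions.

```python
M_CAO = 56.077     # g/mol
M_H2SO4 = 98.079   # g/mol


def acid_per_clinker(cao_fraction, cao_from_sulfate=1.0):
    """kg H2SO4 (as 100 % acid) co-produced per kg clinker (hypothetical helper).

    Assumes one mole of SO2 per mole of CaO supplied by CaSO4, all of it
    converted to sulfuric acid. cao_from_sulfate is the share of the
    clinker CaO that came from sulfate rather than carbonate.
    """
    return cao_fraction * cao_from_sulfate * M_H2SO4 / M_CAO


print(f"{acid_per_clinker(0.65):.2f} kg acid per kg clinker")
```

On these assumptions a plant makes a little over a tonne of acid for every tonne of clinker, so it is the acid market, not the cement market, that limits the size of such a plant.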

CO2 from kiln fuel. This item diminished considerably during the twentieth century as improvements in kiln technology reduced fuel usage; this trend is described in the section on energy consumption above. The other factor involved is the amount of CO2 produced on burning individual fuels. Diluting a fuel with non-combustible material reduces its CO2 production per kg but increases the amount of fuel required in the same proportion, so CO2 production is best assessed as kilograms of CO2 per gigajoule of nett combustion energy. Values are given in the fuel data sheets.
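The argument for quoting kg CO2 per GJ can be checked numerically: diluting a fuel with inert, dry ash scales its carbon and its heat by the same factor, so the per-GJ figure is unchanged (moisture behaves differently, because evaporating it costs heat). The sketch below uses a hypothetical coal with 75% carbon and a net calorific value of 29 MJ/kg, and assumes complete combustion. The 10 MJ/kg heat input is the early rotary kiln figure from the text; 3.3 MJ/kg is an assumed modern figure.

```python
M_C = 12.011    # g/mol
M_CO2 = 44.009  # g/mol


def co2_per_gj(carbon_fraction, net_cv_gj_per_kg):
    """kg CO2 per GJ of net heat, assuming complete combustion."""
    return carbon_fraction * (M_CO2 / M_C) / net_cv_gj_per_kg


def dilute(carbon_fraction, net_cv_gj_per_kg, ash_fraction):
    """Same fuel mixed with inert dry ash: carbon and heat both scale by (1 - ash)."""
    keep = 1.0 - ash_fraction
    return carbon_fraction * keep, net_cv_gj_per_kg * keep


def kiln_fuel_co2(heat_mj_per_kg_clinker, factor_kg_per_gj):
    """kg CO2 per kg clinker from kiln fuel."""
    return heat_mj_per_kg_clinker * factor_kg_per_gj / 1000.0


# Hypothetical coal: 75 % carbon, net CV 0.029 GJ/kg (29 MJ/kg).
clean = co2_per_gj(0.75, 0.029)
dirty = co2_per_gj(*dilute(0.75, 0.029, ash_fraction=0.20))
print(f"clean: {clean:.1f} kg/GJ, with 20 % extra ash: {dirty:.1f} kg/GJ")

# Early rotary kiln (10 MJ/kg, from the text) and an assumed modern kiln (3.3 MJ/kg).
for heat in (10.0, 3.3):
    print(f"{heat} MJ/kg -> {kiln_fuel_co2(heat, clean):.2f} kg CO2/kg clinker")
```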

For static kilns, the predominant fuel was coke, although anthracite was occasionally used, and some shaft kilns, notably the Dietzsch kiln, were designed to use bituminous coal. Rotary kilns, when first installed in Britain, used bituminous coal exclusively, and continued to do so without exception until the late 1950s, when the delivered price of heavy fuel oil (per GJ) began to fall below that of coal. This was particularly associated with a policy of aggressively selling oil to the West by the USSR. Plants capable of getting bulk deliveries (mainly those close to sea transport) converted completely to oil, and several new plants (Plymstock, Cookstown, Platin) were built without coal facilities. The price advantage started to fade in the late 1960s, and disappeared with the 1973 oil crisis. With the development of a cheap international coal market, oil is now much more expensive than coal. Gas began to be used by a number of plants at around the time that Britain converted to North Sea gas supply, manufacturers typically obtaining favourable rates by negotiating interruptible supply agreements. As demand for gas rose, the price rose rapidly, and gas became excessively expensive from the mid-1970s.

A return to the use of coal coincided with the deregulation of coal prices and the near-extinction of the British coal industry in the 1980s, but because of the general upward trend in the international price of all fossil fuels, there was a simultaneous trend towards the use of cheaper fuels obtainable as by-products or waste streams. By far the most important of these was petroleum coke (petcoke) obtained from oil refining, and this has become a mainstay of the industry. From the late 1970s, the use of domestic waste was explored, and this continues. Other significant sources are waste oils and worn-out rubber tyres. Historically, the move towards using waste streams as fuels has been prompted largely by their low price. As a means of mitigating CO2, their status is not clear-cut, because many of them derive ultimately from fossil fuels. “Sustainable” fuels, deriving their energy from recent photosynthesis, are various forms of biomass, the rather limited natural rubber component of tyres, and the paper and cellulose components of domestic waste.
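The CO2 status of a mixed fuel can be tracked by splitting each fuel's emission into fossil and biogenic parts. A minimal sketch, assuming the accounting convention that CO2 from recent photosynthesis counts as zero; the fuel names, heat shares, emission factors and biogenic fractions below are all hypothetical, not measured values for any plant or fuel.

```python
from dataclasses import dataclass


@dataclass
class Fuel:
    name: str
    heat_share: float         # fraction of kiln heat supplied by this fuel
    co2_per_gj: float         # total kg CO2 per GJ (fossil + biogenic)
    biogenic_fraction: float  # share of the carbon from recent photosynthesis


def fossil_co2(fuels, heat_gj_per_t):
    """Fossil kg CO2 per tonne clinker, counting biogenic CO2 as zero."""
    total_share = sum(f.heat_share for f in fuels)
    if abs(total_share - 1.0) > 1e-6:
        raise ValueError("heat shares must sum to 1")
    return sum(heat_gj_per_t * f.heat_share * f.co2_per_gj * (1.0 - f.biogenic_fraction)
               for f in fuels)


# Every figure below is hypothetical, chosen only to show the bookkeeping.
mix = [
    Fuel("petcoke", 0.70, 97.0, 0.0),
    Fuel("tyres", 0.20, 85.0, 0.25),
    Fuel("biomass", 0.10, 110.0, 1.0),
]
print(f"fossil CO2: {fossil_co2(mix, heat_gj_per_t=3.3):.0f} kg per tonne clinker")
```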

CO2 from raw material preparation. A small component of clinker's CO2 footprint has come from fuels burned in digging raw materials out of the ground and transporting them to the plant site. This initially involved only human labour, but gradual mechanisation increased the use of fuels, which probably peaked with the steam-powered quarries of the 1920s at an average of 0.0005 kg/kg. Since then, more efficient vehicles and economies of scale have reduced this figure to perhaps 0.0002 kg/kg.

Much more significant than these tiny quantities is the fuel used in drying the raw material before it reaches the kiln. This naturally depends upon the nature of the raw materials and the type of process used. For chamber kilns and wet process rotary kilns (where the drying is done in the kiln) and for suspension preheater and precalciner kilns (where waste gas is used to do the drying), this quantity is zero. For other processes, however, it could be substantial. In kg CO2 per kg clinker, it reached 0.32 for “double burning” bottle kilns, which involved a preliminary calcination stage, and 0.064 was typical for “dry process” bottle kilns. The most numerous kilns requiring pre-drying were wet process bottle kilns. These had a ratio of 0.10 kg/kg up to the late 1850s, when slurry was largely dried by sedimentation; the ratio then rose to 0.15 when drying flats were used for semi-dried settled slurry, and during the period 1870-1885, as the “thick slurry” process was gradually adopted, it rose to 0.20, where it stayed. In modern times, the most significant process requiring pre-drying was the Lepol process, for which a ratio of 0.029 was typical.
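Pre-drying CO2 follows a simple product: water evaporated per kg of clinker, times fuel heat per kg of water, times the emission factor of the drying fuel. The sketch below is illustrative only. The function name, the 3.5 MJ/kg heat demand (which must exceed the roughly 2.3-2.5 MJ/kg latent heat of water because of dryer losses) and the 105 kg CO2/GJ factor for coke are assumptions, and the sketch is not calibrated to reproduce the historical ratios quoted.

```python
def drying_co2(water_kg_per_kg_clinker, heat_mj_per_kg_water, fuel_kg_co2_per_gj):
    """kg CO2 per kg clinker from fuel burned to pre-dry the raw material
    (hypothetical helper).

    water_kg_per_kg_clinker -- water evaporated per kg of clinker made
    heat_mj_per_kg_water    -- gross fuel heat per kg evaporated, including
                               dryer losses (always above the latent heat)
    fuel_kg_co2_per_gj      -- emission factor of the drying fuel
    """
    return water_kg_per_kg_clinker * heat_mj_per_kg_water * fuel_kg_co2_per_gj / 1000.0


# Assumed inputs: 3.5 MJ of coke heat per kg of water, coke at 105 kg CO2/GJ.
for water in (0.15, 0.30, 0.60):
    print(f"{water:.2f} kg water/kg clinker -> "
          f"{drying_co2(water, 3.5, 105.0):.3f} kg CO2/kg clinker")
```

The result is proportional to the water removed, which is why processes that dry in the kiln or with waste gas score zero on this item.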

CO2 from production of motive power. The motive power requirements of fixed plant (mainly raw mills, fuel mills and finish mills) and the resulting carbon dioxide emissions are complex, and are treated in a separate article.
